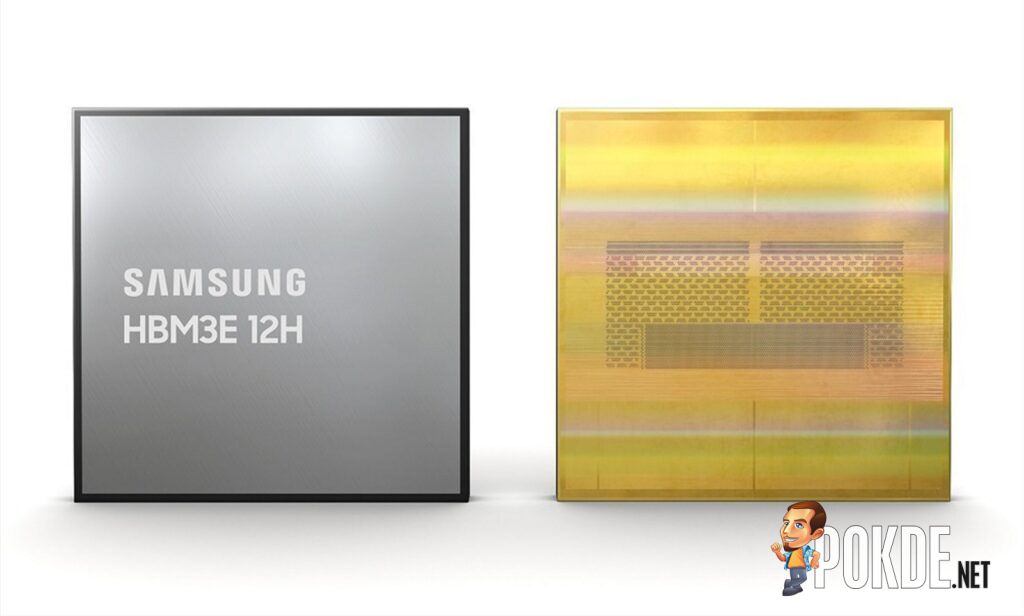

Samsung Unveils Advanced HBM3E 12H DRAM for AI

Samsung has announced its HBM3E 12H DRAM, built with advanced TC NCF technology. If that string of acronyms means little to you, here is what each part stands for and why the announcement is a notable step forward for high bandwidth memory (HBM).

Samsung HBM3E 12H DRAM

To break it down, HBM, or high bandwidth memory, does exactly what its name suggests: it delivers far higher data transfer rates than conventional memory. In October 2023, Samsung introduced HBM3E Shinebolt, an enhanced version of HBM3 that reaches 9.8Gbps per pin and an overall package bandwidth of over 1.2 terabytes per second.
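The per-pin speed and the package bandwidth are linked by simple arithmetic: multiply the per-pin rate by the number of data pins, then divide by 8 to convert bits to bytes. The sketch below assumes a 1024-bit interface per stack, as used by HBM3-class memory; that width is not stated in the article, and the function name is hypothetical.

```python
# Sketch: per-stack HBM bandwidth from the per-pin data rate.
# Assumption: a 1024-bit (1024 data pins) interface per stack, as used by
# HBM3-class memory. This width is not given in the article.

def stack_bandwidth_gb_s(pin_rate_gbps: float, bus_width_bits: int = 1024) -> float:
    """Return per-stack bandwidth in decimal gigabytes per second."""
    total_gbps = pin_rate_gbps * bus_width_bits  # gigabits/s summed across all pins
    return total_gbps / 8  # 8 bits per byte


if __name__ == "__main__":
    bw = stack_bandwidth_gb_s(9.8)
    print(f"{bw:.1f} GB/s, about {bw / 1000:.2f} TB/s")
```

At 9.8Gbps per pin this works out to roughly 1,254GB/s, or about 1.25TB/s, which lines up with the "over 1.2 terabytes per second" figure.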

Next comes the “12H” part, which refers to the number of DRAM dies stacked vertically in each module: 12 in this case. Stacking more dies packs more memory into a single module, and Samsung’s 12H design reaches 36GB, a 50% increase over an 8H configuration. Despite the added capacity, bandwidth stays at roughly 1.2 terabytes per second.
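The 50% figure follows directly from the die count. The sketch below assumes 24Gb (3GB) DRAM dies, a density inferred from 36GB divided by 12 dies rather than stated in the article; the constant and function names are hypothetical.

```python
# Sketch: HBM stack capacity from die count and per-die density.
# Assumption: 24Gb (3GB) dies, inferred from 36GB / 12 dies; not stated in the article.

GBIT_PER_DIE = 24  # hypothetical per-die density in gigabits


def stack_capacity_gb(dies: int, gbit_per_die: int = GBIT_PER_DIE) -> float:
    """Return stack capacity in gigabytes (8 bits per byte)."""
    return dies * gbit_per_die / 8


if __name__ == "__main__":
    cap_12h = stack_capacity_gb(12)
    cap_8h = stack_capacity_gb(8)
    increase_pct = (cap_12h - cap_8h) / cap_8h * 100
    print(f"12H: {cap_12h:.0f}GB, 8H: {cap_8h:.0f}GB, increase: {increase_pct:.0f}%")
```

Because stacking more dies adds capacity without widening the stack's external interface, capacity scales with die count while bandwidth stays roughly constant.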

The final piece of the puzzle is TC NCF, short for thermal compression non-conductive film, the material layered between the stacked dies. Samsung has continued to thin this film, narrowing the gap between dies to just 7µm, which lets the 12H stack keep the same height as an 8H stack. That keeps the new part compatible with existing HBM package requirements while improving its thermal properties for better cooling. Samsung also says the HBM3E 12H DRAM delivers improved yields.

As for applications, demand for this kind of memory is strongest in artificial intelligence (AI). Samsung estimates that, compared with HBM3 8H, the added capacity of the 12H design can speed up AI training by an average of 34% and let inference services handle more than 11.5 times as many simultaneous users.

As AI accelerators such as the NVIDIA H200 Tensor Core GPU continue to drive demand for high-performance memory, Samsung, Micron, and SK hynix are all positioning themselves as key suppliers in this lucrative market.

Pokdepinion: With the potential to reshape the landscape of AI technology, the HBM3E 12H DRAM sets the stage for a new era in high-performance computing.